This post introduces how to run a fixed throughput OLTP workload with HammerDB. As an example, we will use the CLI to run TPROC-C against a MariaDB database to illustrate the concepts.

Setting the bar with the default workload

First, it is important to understand that most users wanting to run an OLTP workload will use the default approach. It is typically the fastest way to determine the maximum throughput of a database with minimal configuration, and over time it has been proven to show the same performance ratios between systems as a well-configured fixed throughput setup. Therefore, the first thing we will do is run a default workload using the sample scripts provided, as shown.

```tcl
set tmpdir $::env(TMP)
puts "SETTING CONFIGURATION"
dbset db maria
dbset bm TPC-C
diset connection maria_host localhost
diset connection maria_port 3306
diset connection maria_socket /tmp/mariadb.sock
diset tpcc maria_user root
diset tpcc maria_pass maria
diset tpcc maria_dbase tpcc
diset tpcc maria_driver timed
diset tpcc maria_rampup 2
diset tpcc maria_duration 5
diset tpcc maria_allwarehouse false
diset tpcc maria_timeprofile false
loadscript
puts "TEST STARTED"
vuset vu 80
vucreate
tcstart
tcstatus
set jobid [ vurun ]
vudestroy
tcstop
puts "TEST COMPLETE"
set of [ open $tmpdir/maria_tprocc w ]
puts $of $jobid
close $of
```

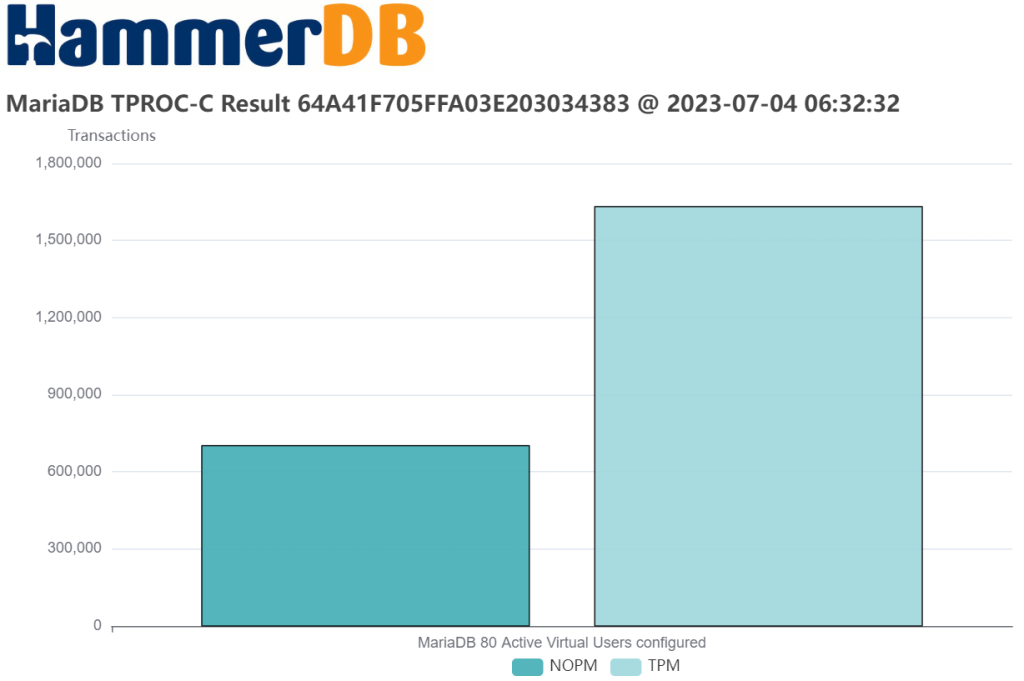

In this test, our MariaDB 10.10.1 database built with 1000 warehouses returned just over 700,000 NOPM, illustrating the upper limit of the system and database combination we are testing.

Vuser 1:80 Active Virtual Users configured

Vuser 1:TEST RESULT : System achieved 701702 NOPM from 1631827 MariaDB TPM

Fixing throughput with keying and thinking time

The key concept in understanding fixed throughput is keying and thinking time. The setting does exactly what it sounds like: it simulates the delay a real user would introduce when entering data (keying time) and when reading the results returned (thinking time). As a first step, we will enable keying and thinking time for one virtual user and run the test with 2 minutes of rampup and 2 minutes of testing time.

```tcl
diset tpcc maria_keyandthink true
```

When we run the test, we can see that our virtual user ran transactions at 1 NOPM (new orders per minute), with most of the time now spent in keying and thinking time.

TEST STARTED

Vuser 1 created MONITOR - WAIT IDLE

Vuser 2 created - WAIT IDLE

2 Virtual Users Created with Monitor VU

Transaction Counter Started

Transaction Counter thread running with threadid:tid0x7f141b7fe700

Vuser 1:RUNNING

Vuser 1:Ssl_cipher

0 MariaDB tpm

Vuser 1:Beginning rampup time of 2 minutes

Vuser 2:RUNNING

Vuser 2:Ssl_cipher

Vuser 2:Processing 10000000 transactions with output suppressed...

0 MariaDB tpm

0 MariaDB tpm

0 MariaDB tpm

0 MariaDB tpm

6 MariaDB tpm

Vuser 1:Rampup 1 minutes complete ...

6 MariaDB tpm

0 MariaDB tpm

0 MariaDB tpm

12 MariaDB tpm

6 MariaDB tpm

6 MariaDB tpm

Vuser 1:Rampup 2 minutes complete ...

Vuser 1:Rampup complete, Taking start Transaction Count.

Vuser 1:Timing test period of 2 in minutes

0 MariaDB tpm

6 MariaDB tpm

0 MariaDB tpm

0 MariaDB tpm

6 MariaDB tpm

12 MariaDB tpm

Vuser 1:1 ...,

6 MariaDB tpm

6 MariaDB tpm

0 MariaDB tpm

0 MariaDB tpm

0 MariaDB tpm

6 MariaDB tpm

Vuser 1:2 ...,

Vuser 1:Test complete, Taking end Transaction Count.

Vuser 1:1 Active Virtual Users configured

Vuser 1:TEST RESULT : System achieved 1 NOPM from 3 MariaDB TPM

Vuser 1:FINISHED SUCCESS

Now, let’s see what happens if we increase the number of virtual users to 10 and 100. Again, each virtual user we add is running at approximately 1 NOPM.

Vuser 1:10 Active Virtual Users configured

Vuser 1:TEST RESULT : System achieved 14 NOPM from 29 MariaDB TPM

Vuser 1:100 Active Virtual Users configured

Vuser 1:TEST RESULT : System achieved 117 NOPM from 284 MariaDB TPM

So what we have by adding keying and thinking time is a fixed throughput workload. Each virtual user we add is going to add approximately 1 NOPM, so we know exactly the configuration we need to test a particular level of throughput up to the limits of the system we found with the default workload.

Maximum Throughput

So far we have seen throughput of ‘approximately’ 1 NOPM per virtual user. However, the HammerDB TPROC-C workload is derived from the TPC-C specification and uses the same keying and thinking times, and the specification defines the maximum throughput as follows:

The maximum throughput is achieved with infinitely fast transactions resulting in a null response time and minimum required wait times. The intent of this clause is to prevent reporting a throughput that exceeds this maximum, which is computed to be 12.86 tpmC per warehouse.

In the TPC-C specification, each warehouse has 10 connections, so with the HammerDB derived workload we can use 1.28 NOPM per virtual user (12.86 / 10, truncated to two decimal places) as a guide to our fixed throughput.
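As a rough sketch of this arithmetic in plain Tcl, the snippet below derives the per-session ceiling from the specification figure and uses it in both directions. The helper names `max_nopm` and `sessions_for` are hypothetical and not part of HammerDB, and 701702 is the NOPM measured in the default run earlier in this post.

```tcl
# Spec ceiling quoted above: 12.86 tpmC per warehouse, 10 connections per warehouse.
set max_tpmc_per_warehouse 12.86
set connections_per_warehouse 10

# Upper bound on NOPM for a single session (the post quotes this as 1.28).
set nopm_ceiling [expr {$max_tpmc_per_warehouse / $connections_per_warehouse}]

# Hypothetical helper: upper bound on NOPM for a given number of sessions.
proc max_nopm {sessions nopm_ceiling} {
    return [expr {$sessions * $nopm_ceiling}]
}

# Hypothetical helper: minimum sessions needed to reach a target NOPM.
proc sessions_for {target_nopm nopm_ceiling} {
    return [expr {int(ceil($target_nopm / $nopm_ceiling))}]
}

puts [format "%.3f" $nopm_ceiling]                  ;# 1.286
puts [format "%.1f" [max_nopm 100 $nopm_ceiling]]    ;# 128.6
puts [sessions_for 701702 $nopm_ceiling]             ;# 545647
```

The last figure is only a lower bound on the sessions required, because real transactions take time to complete and so each session runs a little below the ceiling.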

Asynchronous Scaling

Now, this guide of 1.28 NOPM per virtual user presents a challenge if we want to scale numerous virtual users to drive meaningful throughput, particularly when these virtual users spend most of their time waiting in keying or thinking time. For this reason, HammerDB implements asynchronous scaling, whereby each virtual user can make multiple connections to the database and run the transactions for these connections when they wake from their keying and thinking time. We have covered the concept of parallelism vs concurrency in many blog posts before, e.g.

Why Tcl is 700% faster than Python for database benchmarking

Therefore, it should be clear that the virtual users are operating in parallel and the asynchronous connections concurrently. This way, we can scale HammerDB fixed throughput workloads to thousands of connections by setting the async_scale and async_client options. Note that keyandthink must be enabled as it is the keying and thinking time that is managed asynchronously.

```tcl
diset tpcc maria_async_scale true
diset tpcc maria_async_client 10
```

So let’s re-run with 1 virtual user, but this time with 10 asynchronous clients. We can see that the virtual user now makes 10 connections and we get the 12 NOPM we are expecting.

TEST STARTED

Vuser 1 created MONITOR - WAIT IDLE

Vuser 2 created - WAIT IDLE

2 Virtual Users Created with Monitor VU

Transaction Counter Started

Transaction Counter thread running with threadid:tid0x7f1ce2b97700

Vuser 1:RUNNING

Vuser 1:Ssl_cipher

0 MariaDB tpm

Vuser 1:Beginning rampup time of 2 minutes

Vuser 2:RUNNING

Vuser 2:Started asynchronous clients:vuser2:ac1 vuser2:ac2 vuser2:ac3 vuser2:ac4 vuser2:ac5 vuser2:ac6 vuser2:ac7 vuser2:ac8 vuser2:ac9 vuser2:ac10

Vuser 2:Processing 10000000 transactions with output suppressed...

Vuser 2:Processing 10000000 transactions with output suppressed...

Vuser 2:Processing 10000000 transactions with output suppressed...

Vuser 2:Processing 10000000 transactions with output suppressed...

Vuser 2:Processing 10000000 transactions with output suppressed...

Vuser 2:Processing 10000000 transactions with output suppressed...

Vuser 2:Processing 10000000 transactions with output suppressed...

Vuser 2:Processing 10000000 transactions with output suppressed...

Vuser 2:Processing 10000000 transactions with output suppressed...

Vuser 2:Processing 10000000 transactions with output suppressed...

...

Vuser 1:Test complete, Taking end Transaction Count.

Vuser 1:1 VU * 10 AC = 10 Active Sessions configured

Vuser 1:TEST RESULT : System achieved 12 NOPM from 31 MariaDB TPM

Vuser 1:FINISHED SUCCESS

Now if we ramp up the connections to 100 asynchronous clients, we get 128 NOPM.

Vuser 2:RUNNING

Vuser 2:Started asynchronous clients:vuser2:ac1 vuser2:ac2 vuser2:ac3 vuser2:ac4 vuser2:ac5 vuser2:ac6 vuser2:ac7 vuser2:ac8 vuser2:ac9 vuser2:ac10 vuser2:ac11 vuser2:ac12 vuser2:ac13 vuser2:ac14 vuser2:ac15 vuser2:ac16 vuser2:ac17 vuser2:ac18 vuser2:ac19 vuser2:ac20 vuser2:ac21 vuser2:ac22 vuser2:ac23 vuser2:ac24 vuser2:ac25 vuser2:ac26 vuser2:ac27 vuser2:ac28 vuser2:ac29 vuser2:ac30 vuser2:ac31 vuser2:ac32 vuser2:ac33 vuser2:ac34 vuser2:ac35 vuser2:ac36 vuser2:ac37 vuser2:ac38 vuser2:ac39 vuser2:ac40 vuser2:ac41 vuser2:ac42 vuser2:ac43 vuser2:ac44 vuser2:ac45 vuser2:ac46 vuser2:ac47 vuser2:ac48 vuser2:ac49 vuser2:ac50 vuser2:ac51 vuser2:ac52 vuser2:ac53 vuser2:ac54 vuser2:ac55 vuser2:ac56 vuser2:ac57 vuser2:ac58 vuser2:ac59 vuser2:ac60 vuser2:ac61 vuser2:ac62 vuser2:ac63 vuser2:ac64 vuser2:ac65 vuser2:ac66 vuser2:ac67 vuser2:ac68 vuser2:ac69 vuser2:ac70 vuser2:ac71 vuser2:ac72 vuser2:ac73 vuser2:ac74 vuser2:ac75 vuser2:ac76 vuser2:ac77 vuser2:ac78 vuser2:ac79 vuser2:ac80 vuser2:ac81 vuser2:ac82 vuser2:ac83 vuser2:ac84 vuser2:ac85 vuser2:ac86 vuser2:ac87 vuser2:ac88 vuser2:ac89 vuser2:ac90 vuser2:ac91 vuser2:ac92 vuser2:ac93 vuser2:ac94 vuser2:ac95 vuser2:ac96 vuser2:ac97 vuser2:ac98 vuser2:ac99 vuser2:ac100

...

Vuser 1:Test complete, Taking end Transaction Count.

Vuser 1:1 VU * 100 AC = 100 Active Sessions configured

Vuser 1:TEST RESULT : System achieved 128 NOPM from 286 MariaDB TPM

Vuser 1:FINISHED SUCCESS

And 10,000 connections (100 virtual users, each with 100 asynchronous clients) give us 12,612 NOPM, which is what we would expect from our fixed throughput configuration.

mysql> show processlist

...

10003 rows in set (0.01 sec)

...

Vuser 1:Test complete, Taking end Transaction Count.

Vuser 1:100 VU * 100 AC = 10000 Active Sessions configured

Vuser 1:TEST RESULT : System achieved 12612 NOPM from 29201 MariaDB TPM

Remember that you should also increase the rampup time to allow enough time for all of the asynchronous clients to connect and reach our fixed throughput rate.
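Pulling the settings from this section together, a configuration fragment for the 100 virtual user by 100 asynchronous client run might look like the following sketch. Every command is one already used in this post; the rampup value of 10 minutes is an illustrative assumption, not a recommendation, chosen because with the 1000 ms delay between client logins shown in the saved script, 100 clients per virtual user need around 100 seconds just to log in.

```tcl
# Fixed throughput settings for 100 virtual users x 100 asynchronous clients.
diset tpcc maria_keyandthink true    ;# required: keying and thinking time is what is managed asynchronously
diset tpcc maria_async_scale true
diset tpcc maria_async_client 100
diset tpcc maria_rampup 10           ;# assumed value; size it to your client count and login delay
diset tpcc maria_duration 5
loadscript
vuset vu 100
```

This fragment replaces only the workload settings; the connection settings and the vucreate, tcstart and vurun steps stay as in the default script.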

Modifying the keying and thinking time

If you want to modify the keying and thinking time, you can do so by modifying the script you are running. First, run the savescript command.

hammerdb>savescript fixed.tcl

Success ... wrote script to /home/HammerDB-4.8/TMP/fixed.tcl

Then edit the file. Towards the end of the file, you can see the section where transaction selection takes place, along with the implementation of keying and thinking time.

```tcl
set choice [ RandomNumber 1 23 ]
if {$choice <= 10} {
    if { $async_verbose } { puts "$clientname:w_id:$w_id:neword" }
    if { $KEYANDTHINK } { async_keytime 18 $clientname neword $async_verbose }
    neword $maria_handler $w_id $w_id_input $prepare $RAISEERROR $clientname
    if { $KEYANDTHINK } { async_thinktime 12 $clientname neword $async_verbose }
} elseif {$choice <= 20} {
    if { $async_verbose } { puts "$clientname:w_id:$w_id:payment" }
    if { $KEYANDTHINK } { async_keytime 3 $clientname payment $async_verbose }
    payment $maria_handler $w_id $w_id_input $prepare $RAISEERROR $clientname
    if { $KEYANDTHINK } { async_thinktime 12 $clientname payment $async_verbose }
} elseif {$choice <= 21} {
    if { $async_verbose } { puts "$clientname:w_id:$w_id:delivery" }
    if { $KEYANDTHINK } { async_keytime 2 $clientname delivery $async_verbose }
    delivery $maria_handler $w_id $prepare $RAISEERROR $clientname
    if { $KEYANDTHINK } { async_thinktime 10 $clientname delivery $async_verbose }
} elseif {$choice <= 22} {
    if { $async_verbose } { puts "$clientname:w_id:$w_id:slev" }
    if { $KEYANDTHINK } { async_keytime 2 $clientname slev $async_verbose }
    slev $maria_handler $w_id $stock_level_d_id $prepare $RAISEERROR $clientname
    if { $KEYANDTHINK } { async_thinktime 5 $clientname slev $async_verbose }
} elseif {$choice <= 23} {
    if { $async_verbose } { puts "$clientname:w_id:$w_id:ostat" }
    if { $KEYANDTHINK } { async_keytime 2 $clientname ostat $async_verbose }
    ostat $maria_handler $w_id $prepare $RAISEERROR $clientname
    if { $KEYANDTHINK } { async_thinktime 5 $clientname ostat $async_verbose }
}
```

So, we will roughly halve the keying and thinking time (the values must be whole numbers) and save the file.

```tcl
set choice [ RandomNumber 1 23 ]
if {$choice <= 10} {
    if { $async_verbose } { puts "$clientname:w_id:$w_id:neword" }
    if { $KEYANDTHINK } { async_keytime 9 $clientname neword $async_verbose }
    neword $maria_handler $w_id $w_id_input $prepare $RAISEERROR $clientname
    if { $KEYANDTHINK } { async_thinktime 6 $clientname neword $async_verbose }
} elseif {$choice <= 20} {
    if { $async_verbose } { puts "$clientname:w_id:$w_id:payment" }
    if { $KEYANDTHINK } { async_keytime 2 $clientname payment $async_verbose }
    payment $maria_handler $w_id $w_id_input $prepare $RAISEERROR $clientname
    if { $KEYANDTHINK } { async_thinktime 6 $clientname payment $async_verbose }
} elseif {$choice <= 21} {
    if { $async_verbose } { puts "$clientname:w_id:$w_id:delivery" }
    if { $KEYANDTHINK } { async_keytime 1 $clientname delivery $async_verbose }
    delivery $maria_handler $w_id $prepare $RAISEERROR $clientname
    if { $KEYANDTHINK } { async_thinktime 5 $clientname delivery $async_verbose }
} elseif {$choice <= 22} {
    if { $async_verbose } { puts "$clientname:w_id:$w_id:slev" }
    if { $KEYANDTHINK } { async_keytime 1 $clientname slev $async_verbose }
    slev $maria_handler $w_id $stock_level_d_id $prepare $RAISEERROR $clientname
    if { $KEYANDTHINK } { async_thinktime 3 $clientname slev $async_verbose }
} elseif {$choice <= 23} {
    if { $async_verbose } { puts "$clientname:w_id:$w_id:ostat" }
    if { $KEYANDTHINK } { async_keytime 1 $clientname ostat $async_verbose }
    ostat $maria_handler $w_id $prepare $RAISEERROR $clientname
    if { $KEYANDTHINK } { async_thinktime 3 $clientname ostat $async_verbose }
}
```

Now, we can use customscript to load the modified workload and custommonitor to configure the additional monitor virtual user shown in this excerpt.

```tcl
#loadscript
customscript /home/HammerDB-4.8/TMP/fixed.tcl
custommonitor timed
puts "TEST STARTED"
vuset vu 100
```

Note that with a customscript, the values you have configured for the asynchronous clients are now set in the script itself and are not loaded dynamically.

```tcl
set async_client 100;# Number of asynchronous clients per Vuser
set async_verbose false;# Report activity of asynchronous clients
set async_delay 1000;# Delay in ms between logins of asynchronous clients
```

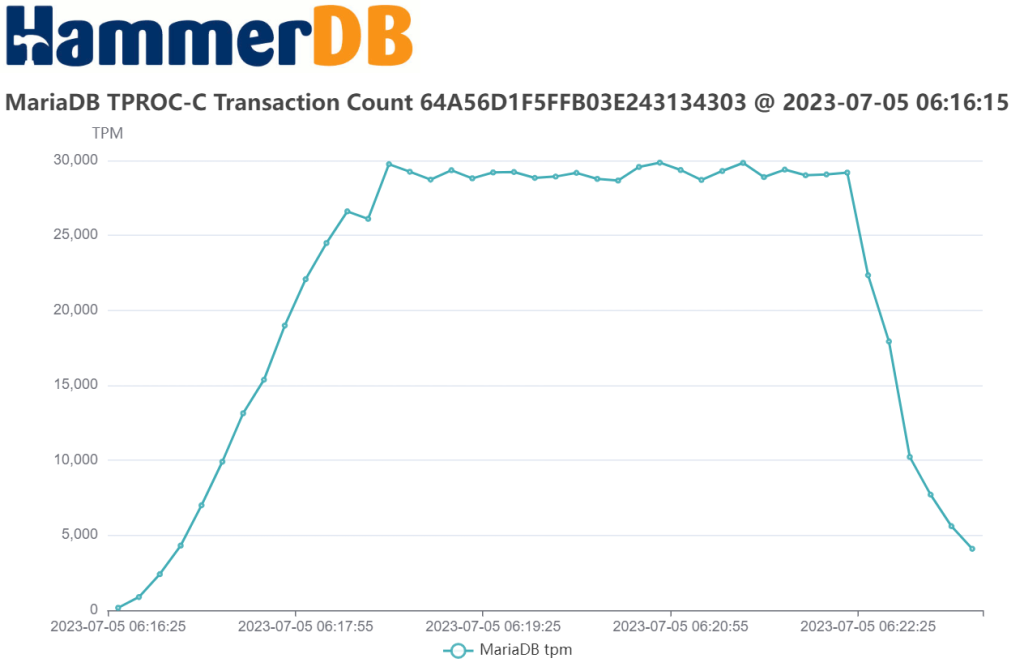

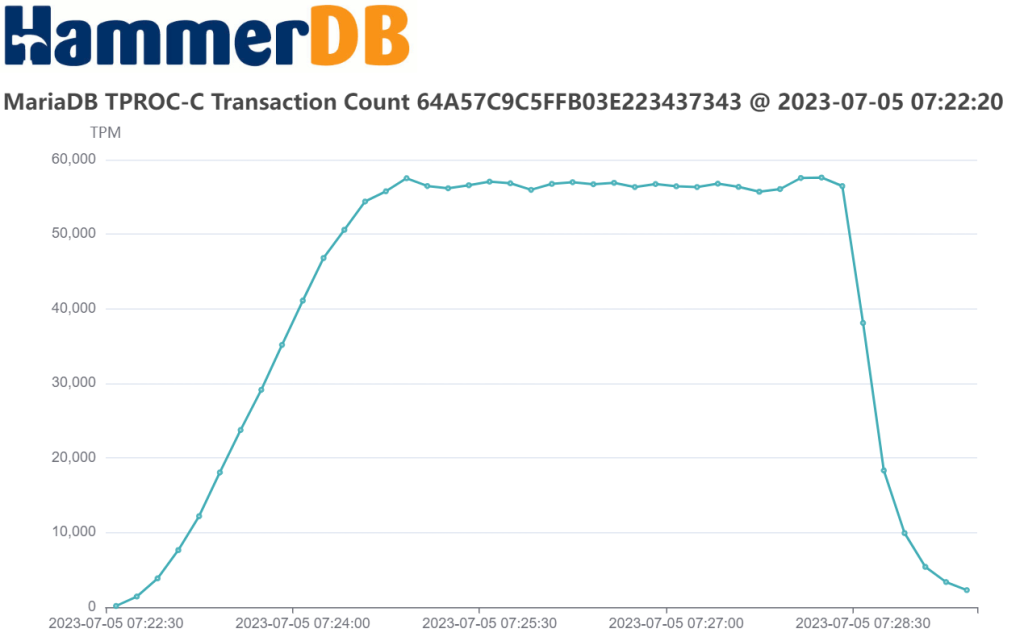

Now that we have halved the keying and thinking time, the throughput has roughly doubled.

Vuser 1:Test complete, Taking end Transaction Count.

Vuser 1:100 VU * 100 AC = 10000 Active Sessions configured

Vuser 1:TEST RESULT : System achieved 24310 NOPM from 56630 MariaDB TPM

Vuser 1:FINISHED SUCCESS
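The effect of changing the keying and thinking values can be estimated with a little arithmetic. The sketch below is plain Tcl, and `nopm_per_session` is a hypothetical helper, not a HammerDB command. It assumes that transactions complete instantly, that each keying and thinking value is a mean delay in seconds, and that transactions are chosen in the 10:10:1:1:1 ratio implied by the transaction selection code in the saved script.

```tcl
# Hypothetical helper, not part of HammerDB.
# mix is a flat list of: name weight keytime thinktime (times in seconds).
proc nopm_per_session {mix} {
    set total_seconds 0
    set neword_weight 0
    foreach {name weight keytime thinktime} $mix {
        # Weighted time spent keying and thinking for this transaction type.
        incr total_seconds [expr {$weight * ($keytime + $thinktime)}]
        if {$name eq "neword"} { set neword_weight $weight }
    }
    # (cycles per minute) * (share of cycles that are new orders)
    # simplifies to 60 * neword_weight / total_seconds.
    return [expr {60.0 * $neword_weight / $total_seconds}]
}

# Values copied from the original and the edited script above.
set default_mix {neword 10 18 12 payment 10 3 12 delivery 1 2 10 slev 1 2 5 ostat 1 2 5}
set halved_mix  {neword 10 9 6 payment 10 2 6 delivery 1 1 5 slev 1 1 3 ostat 1 1 3}

puts [format "%.2f" [nopm_per_session $default_mix]]   ;# 1.26
puts [format "%.2f" [nopm_per_session $halved_mix]]    ;# 2.46
```

For 10,000 sessions this estimates about 12,600 NOPM with the original values and about 24,600 NOPM with the halved values, in line with the 12,612 and 24,310 measured in this post. The halved estimate is slightly less than double because the values had to be rounded to whole numbers.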

You should not reduce the keying and thinking time to minimal values. Remember that a single virtual user is servicing the requests from all of its configured asynchronous connections.

Using middleware

We have illustrated the concept of a fixed throughput workload up to 10,000 connections, and if you have followed the examples you will already have needed to increase system limits such as open files and database limits such as max connections. Nevertheless, the maximum setting for the number of connections in MariaDB is 100,000, which at roughly 1.28 NOPM per connection is still well short of what is needed to reach the 700,000 NOPM of our default workload. For this reason, when configuring such a fixed throughput workload, you will need middleware such as MaxScale or ProxySQL in our MariaDB example. Typically, you will have actual database connections numbered in the low hundreds, with HammerDB connecting to the proxy instead. Additionally, you can configure HammerDB in remote primary and replica modes, connecting multiple instances of HammerDB using asynchronous clients to scale up to the hundreds of thousands of connections needed to drive your system to the maximum throughput achieved with the default workload.