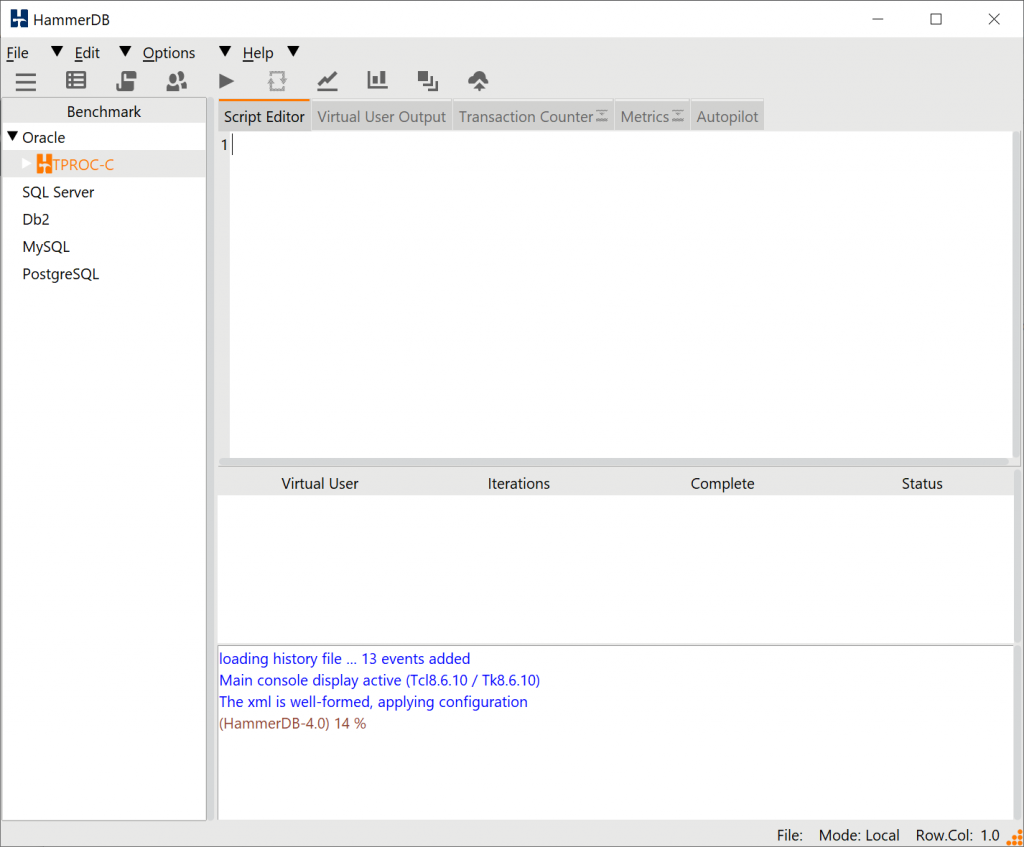

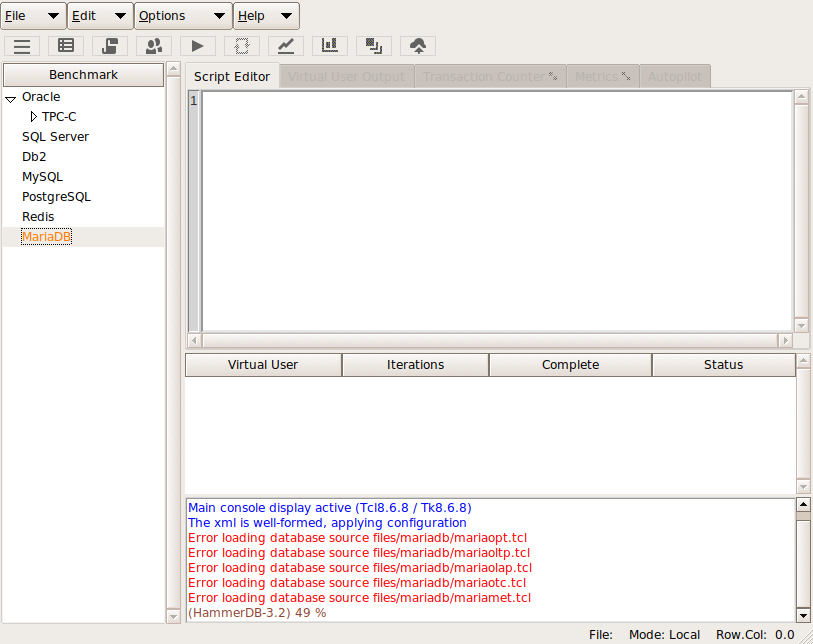

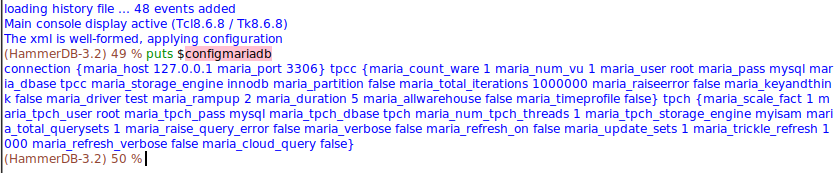

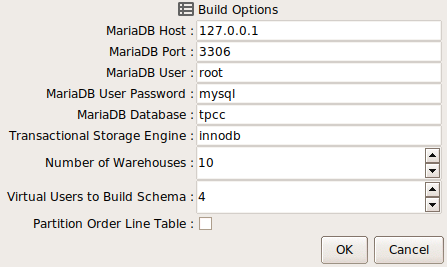

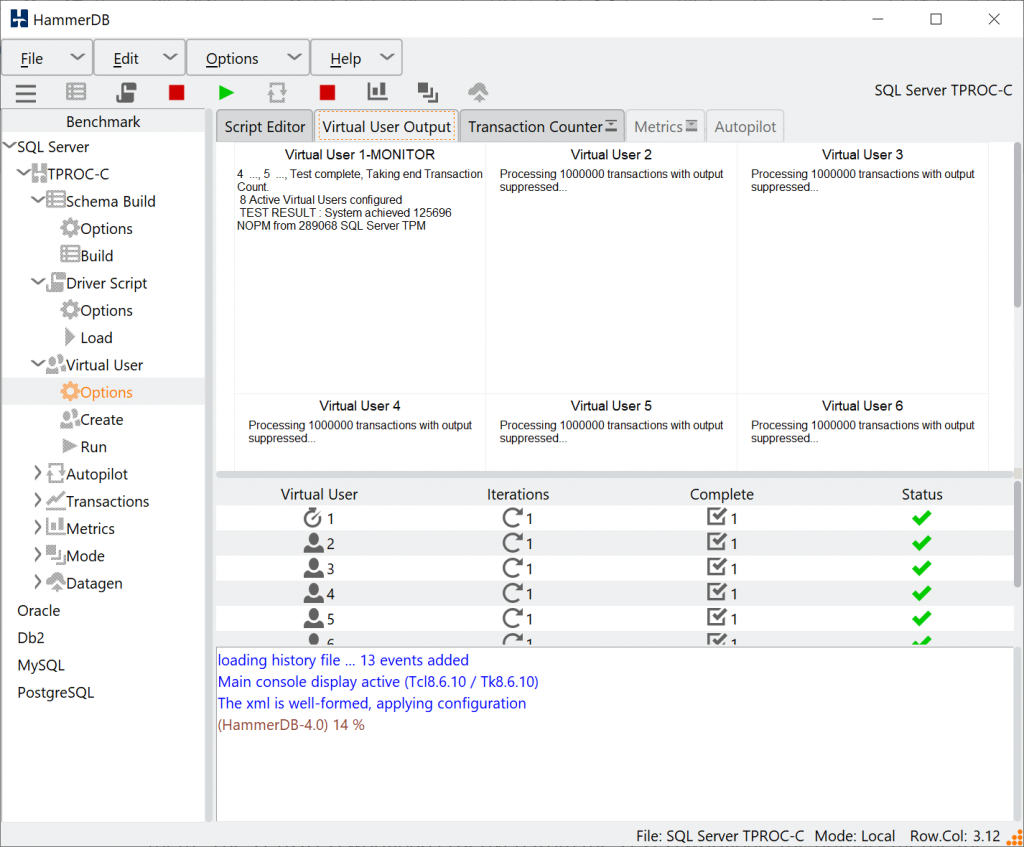

Another key change in HammerDB v4.0 is the refactoring of the stored procedures for some of the TPROC-C workloads. As a result, the performance reported in NOPM and TPM, as well as the ratio between NOPM and TPM, may differ from previous releases for these workloads, so results from v4.0 may not be directly comparable with results from earlier releases for your database. The reason for the changes is that, since the original HammerDB transactions and interfaces were written, some databases have introduced more advanced features or drivers that improve performance. These changes were contributed and accepted into HammerDB v4.0.

NOPM vs TPM

First, it is important to understand the NOPM and TPM metrics and the difference between them. The TPROC-C workload is derived from the TPC-C workload. The primary metric for TPC-C is tpmC, the number of new order transactions processed per minute. Because HammerDB TPROC-C is a derived workload, it is not permitted to use tpmC and instead uses a metric called NOPM, which also records the number of new order transactions processed per minute. Although NOPM is similar to tpmC, the two are not considered directly comparable. From HammerDB v4.0, NOPM should be considered the primary metric and is the only one that should be used for cross-database comparison. For this reason, from HammerDB v4.0 NOPM is printed first.

However, for backward compatibility the generic.xml configuration file contains an option called first_result. Setting this to TPM prints the results in the same format as v3.3 and earlier.

<benchmark> ... <first_result>TPM</first_result> ... </benchmark>
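
As a minimal sketch of making this change programmatically, the Python below sets first_result to TPM in an XML fragment. The fragment is a hypothetical stand-in that contains only the elements shown above (the real generic.xml has more content), the starting value of NOPM is an assumption, and the helper name set_first_result is illustrative rather than part of HammerDB.

```python
import xml.etree.ElementTree as ET

# Hypothetical stand-in for the relevant part of generic.xml; the real file
# contains more elements than are shown here.
SAMPLE = "<benchmark><first_result>NOPM</first_result></benchmark>"


def set_first_result(xml_text: str, value: str) -> str:
    """Return xml_text with the first <first_result> element set to value."""
    root = ET.fromstring(xml_text)
    # Search all descendants of the root for the option element.
    node = root.find(".//first_result")
    if node is None:
        raise ValueError("no <first_result> element found")
    node.text = value
    return ET.tostring(root, encoding="unicode")


print(set_first_result(SAMPLE, "TPM"))
```

Editing the file by hand achieves the same result; the sketch is only useful when several configurations need to be switched consistently.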

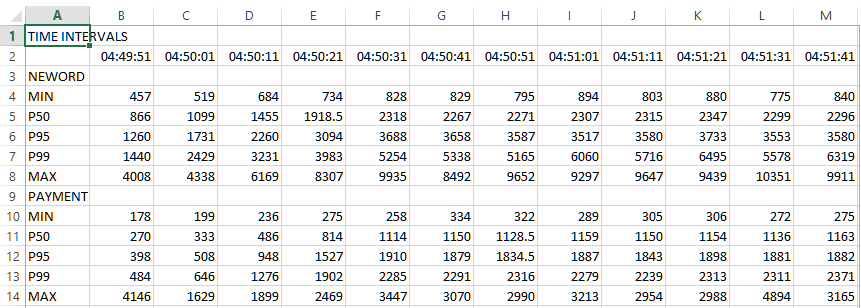

So why not print only NOPM and report a single metric for TPROC-C, as the official TPC-C workloads do? The key reason is that HammerDB is intended to give more insight into database performance. NOPM is a cross-database comparable metric, whereas TPM is a database-specific metric: it cannot be compared between different databases, but it gives more detail on the workload and can be related to other database-specific metrics. For example, for Oracle the TPM value is calculated from the number of user commits plus user rollbacks, and you can query these statistics in the v$sysstat view during the test. Oracle tools such as AWR reports use the same statistics, and as shown, the TPM value captured by HammerDB matches Transactions and Rollbacks in the Load Profile section when those per-second values are multiplied by 60. TPM can then be compared with other Oracle-specific metrics such as redo size and logical reads.

Load Profile

| | Per Second | Per Transaction | Per Exec | Per Call |
| --- | --- | --- | --- | --- |
| DB Time(s): | 151.2 | 0.0 | 0.00 | 0.00 |
| DB CPU(s): | 124.9 | 0.0 | 0.00 | 0.00 |
| Background CPU(s): | 0.0 | 0.0 | 0.00 | 0.00 |
| Redo size (bytes): | 862,578,321.3 | 5,390.2 | | |
| Logical read (blocks): | 10,369,967.8 | 64.8 | | |
| Block changes: | 5,009,042.8 | 31.3 | | |
| Physical read (blocks): | 498.8 | 0.0 | | |
| Physical write (blocks): | 23.6 | 0.0 | | |
| Read IO requests: | 329.7 | 0.0 | | |
| Write IO requests: | 2.2 | 0.0 | | |
| Read IO (MB): | 6.8 | 0.0 | | |
| Write IO (MB): | 0.2 | 0.0 | | |
| IM scan rows: | 0.0 | 0.0 | | |
| Session Logical Read IM: | 0.0 | 0.0 | | |
| User calls: | 122,685.1 | 0.8 | | |
| Parses (SQL): | 74,085.2 | 0.5 | | |
| Hard parses (SQL): | 0.0 | 0.0 | | |
| SQL Work Area (MB): | 155.9 | 0.0 | | |
| Logons: | 0.0 | 0.0 | | |
| User logons: | 0.0 | 0.0 | | |
| Executes (SQL): | 3,303,622.1 | 20.6 | | |
| Rollbacks: | 270.4 | 0.0 | | |
| Transactions: | 160,026.2 | | | |
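
To make the conversion concrete, the following Python sketch multiplies the per-second Load Profile values above by 60 to give per-minute rates. The function name per_minute is illustrative, and the input figures are copied from the table.

```python
def per_minute(per_second: float) -> float:
    """Convert an AWR per-second rate into a per-minute rate."""
    return per_second * 60


# Per-second values copied from the Load Profile table above.
transactions_per_sec = 160_026.2
rollbacks_per_sec = 270.4

print(f"Transactions per minute: {per_minute(transactions_per_sec):,.0f}")
print(f"Rollbacks per minute:    {per_minute(rollbacks_per_sec):,.0f}")
```

The per-minute figures are the ones to set against the TPM value HammerDB reports for the same interval.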

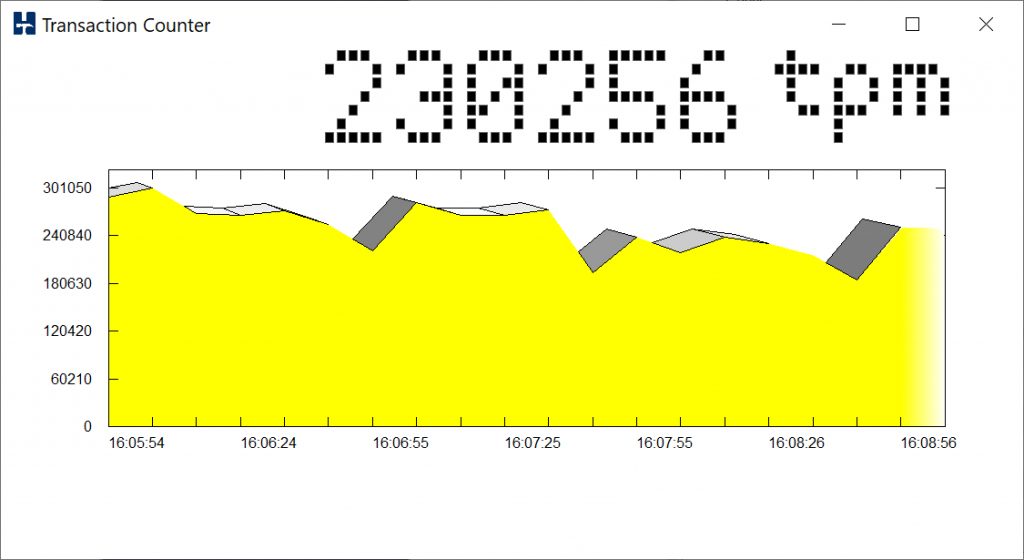

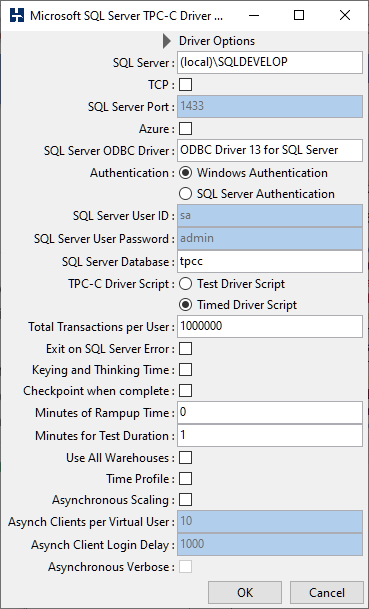

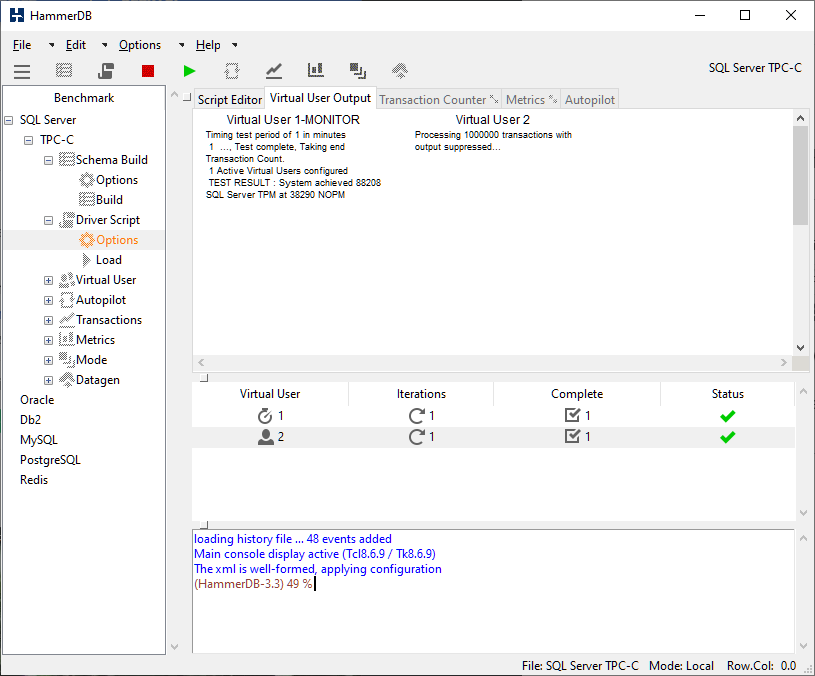

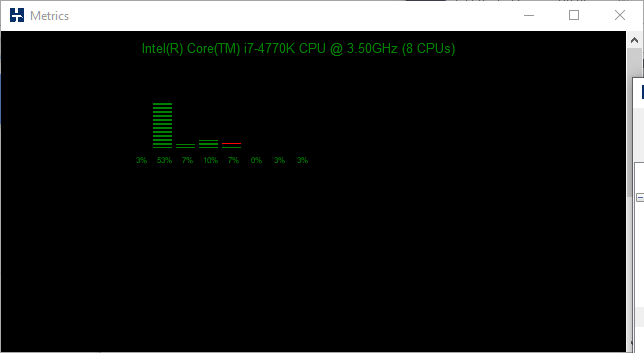

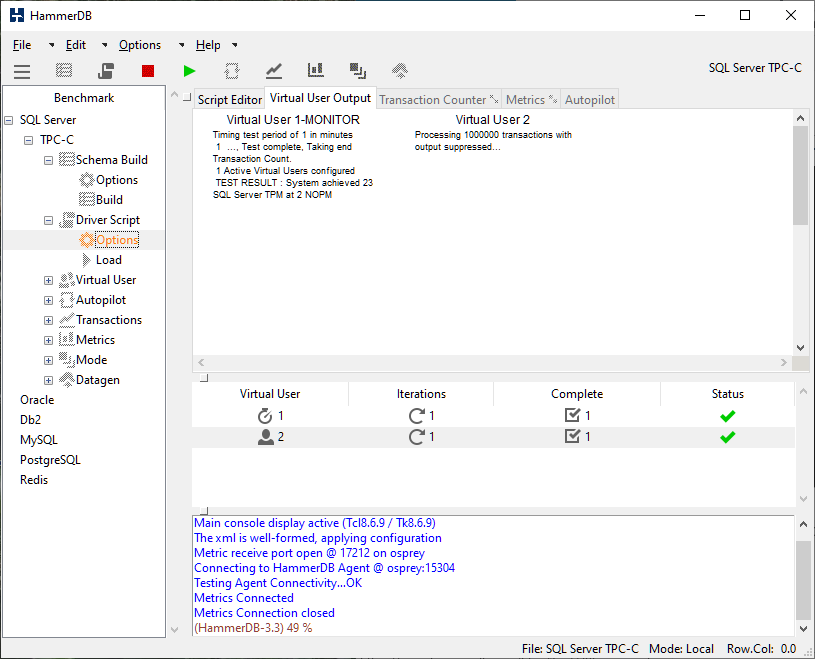

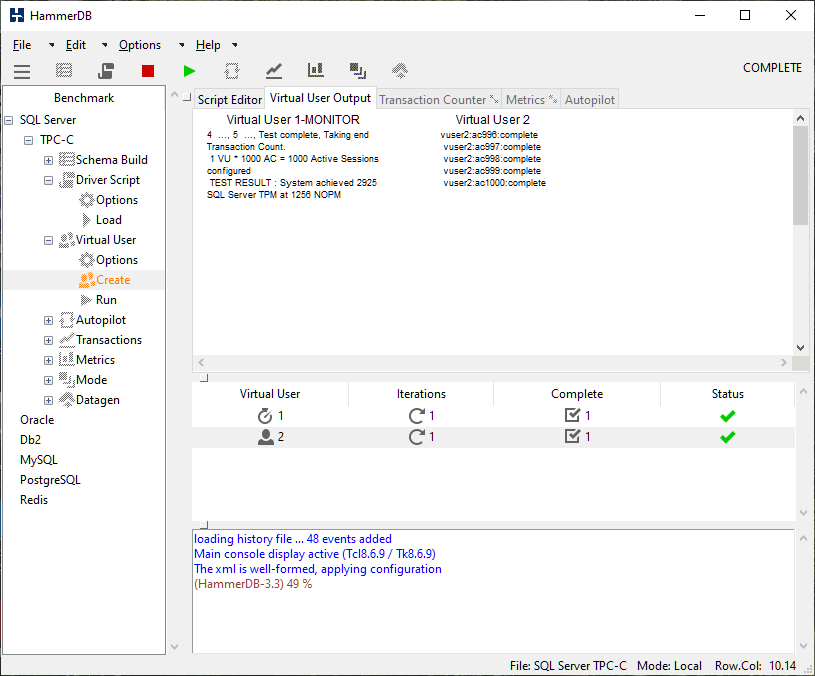

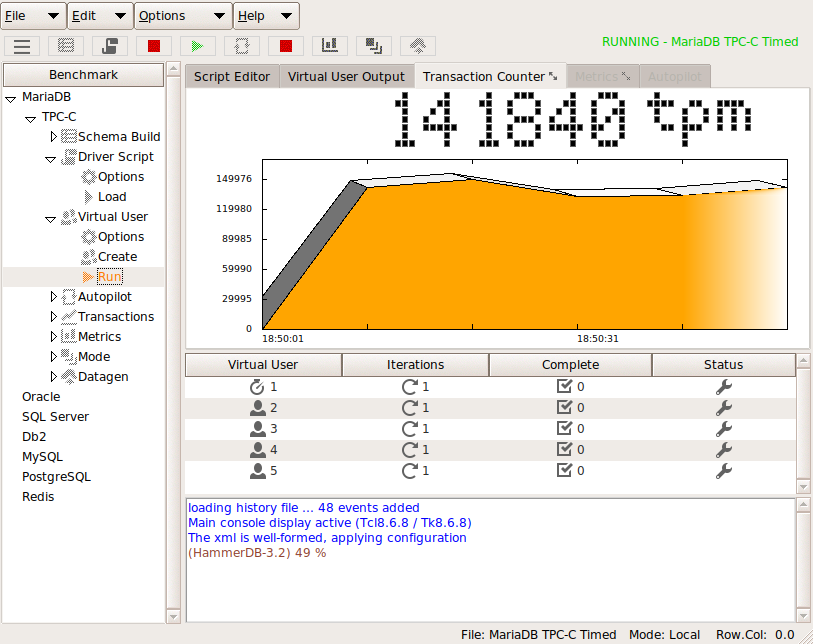

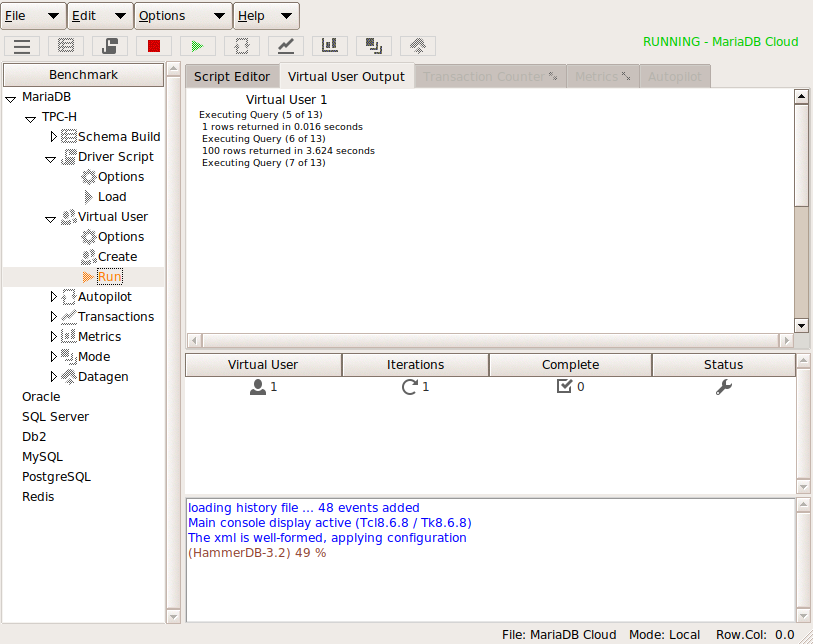

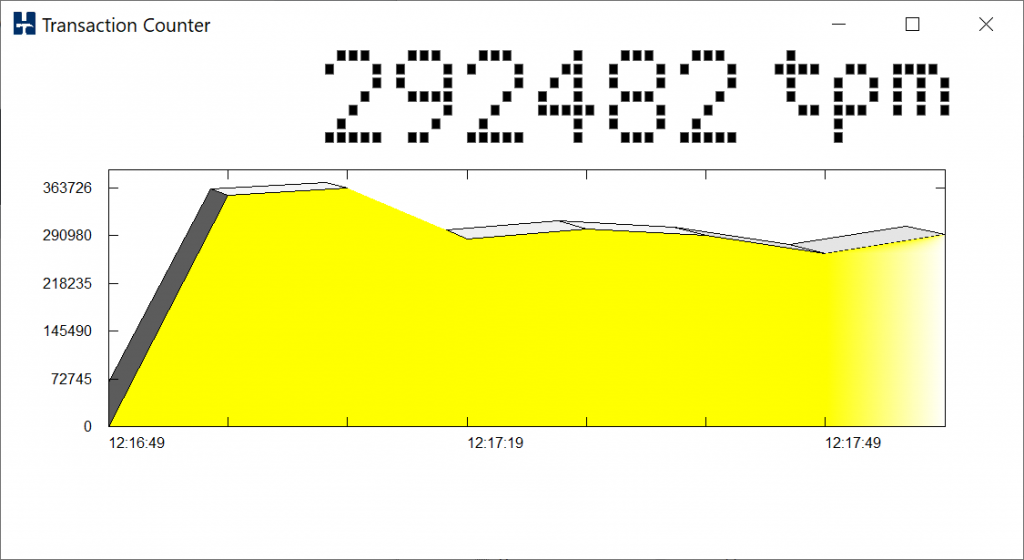

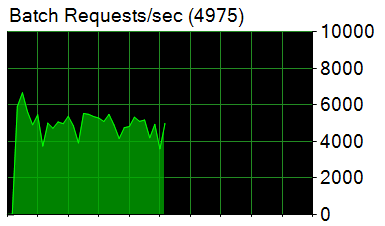

Similarly, for SQL Server TPM records Batches/sec, which is the same value seen in Activity Monitor in SSMS. Using a database-specific metric means that the transaction rate can be sampled in real time for the HammerDB transaction counter, whereas doing so for NOPM would impact the test by reading from a table that is being modified during the test. It can be seen from the example below that the HammerDB Transaction Counter reports the same data per minute as Activity Monitor reports per second, and it can therefore be related directly to the other data that Activity Monitor provides, such as Database I/O.

Refactoring Differences

When using HammerDB v4.0, the notable differences in TPM and NOPM rates that can be observed are as follows:

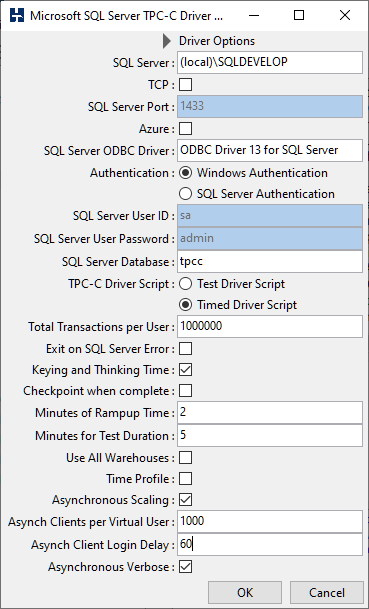

SQL Server

For SQL Server, note that a change in the TPM metric was introduced at v3.3 and continues into v4.0. Initially these releases were planned close together; however, v4.0 was delayed to introduce UHD scalable graphics. The key difference is that v3.2 reports a TPM value 2X that of v3.3 and v4.0, due to a change in the SQL Server driver interface used. The change was prompted by a bug raised against the previous ODBC v1 interface, tclodbc: the third-party library would crash if SQL Server was shut down during a test and the Virtual Users were subsequently closed after they had correctly reported the error. The fix was to move to a newer ODBC v3 interface called tdbc::odbc.

The previous tclodbc interface required 2 commits per transaction, one in the stored procedure and one outside it in the driver script. If the external commit was removed or commented out, the driver would report an error saying that the connection was already in use by a previous command. With the newer interface this external commit was no longer needed and was removed. As a result, the TPM value has reduced, while the NOPM value is unaffected by the change (tests suggest it should be slightly higher with the newer ODBC v3 interface). It is important to note that the HammerDB TPROC-C workload is doing the same amount of work, with one fewer commit. If you compare the driver scripts for the different versions, you can see where the extra commit was removed. For comparisons with previous releases, either use NOPM unmodified as the primary metric or multiply the v3.3 or v4.0 TPM value by 2 to account for the removed commit.
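
The 2X adjustment described above can be sketched in Python as follows. The function name and the sample TPM figure are hypothetical, chosen only to illustrate the arithmetic.

```python
# v3.2 and earlier issued two commits per transaction through tclodbc,
# while v3.3 and v4.0 issue one through tdbc::odbc.
COMMITS_PER_TRANSACTION_OLD = 2


def tpm_on_v32_scale(tpm_v33_or_later: float) -> float:
    """Scale a v3.3 or v4.0 SQL Server TPM value to the v3.2 commit count."""
    return tpm_v33_or_later * COMMITS_PER_TRANSACTION_OLD


# Hypothetical TPM reading from a v4.0 run, for illustration only.
print(tpm_on_v32_scale(500_000))
```

NOPM needs no such adjustment, which is one reason to prefer it when comparing across releases.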

Oracle

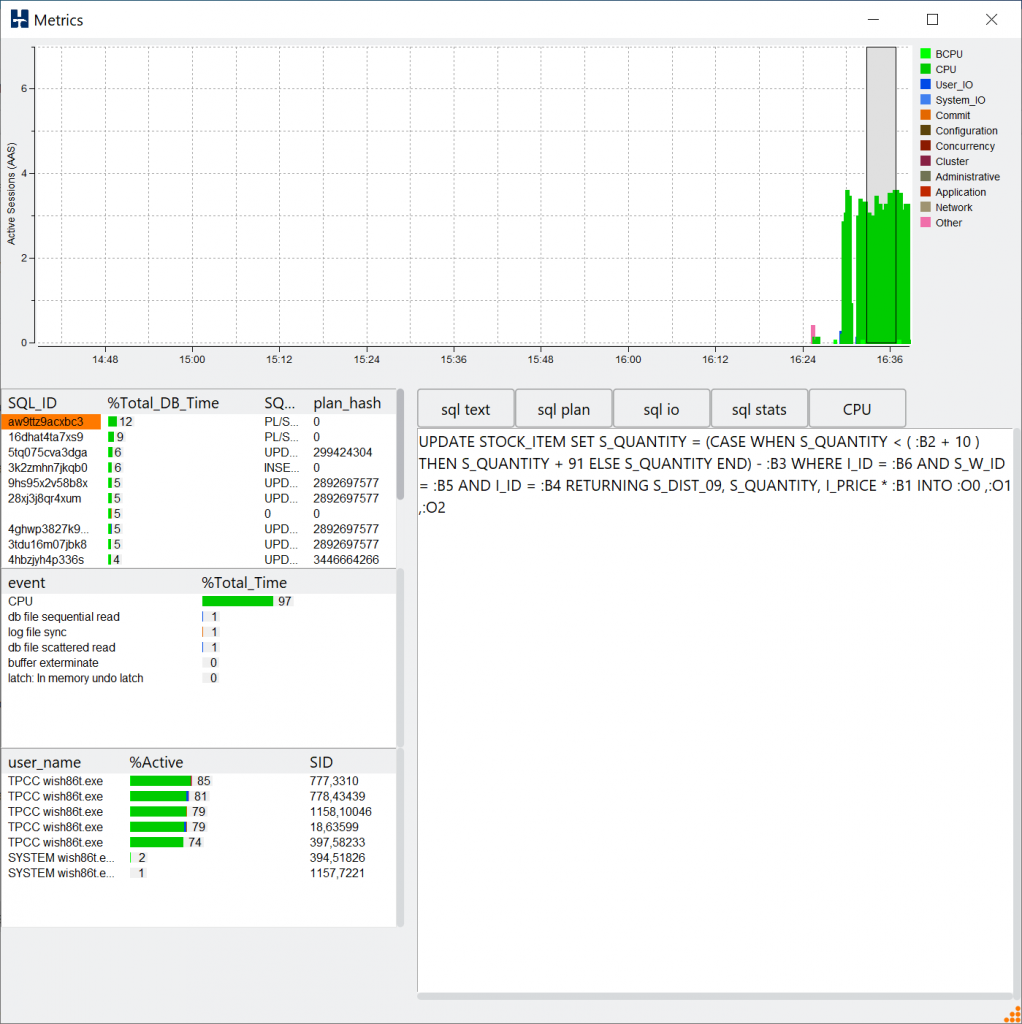

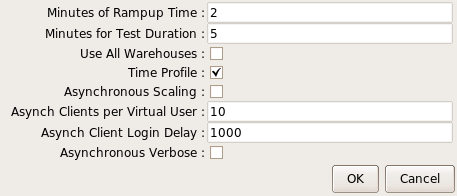

For Oracle, from v4.0 the stored procedures have been updated to use bulk processing features. Because bulk operations process more changes per database commit, an AWR report shows that the redo generated per transaction has also increased from v3.3 to v4.0. For this reason the NOPM value has increased whilst the TPM value has remained level, so the TPM to NOPM ratio has changed for Oracle between these releases. The ratio has been measured at 3.0X for v3.3 and earlier and has reduced to 2.11X for v4.0. HammerDB v4.0 therefore improves Oracle performance by approximately 1.35-1.45X measured in NOPM for the same TPM. This improvement in NOPM reflects a real increase in TPROC-C transactional throughput from using advanced PL/SQL features.
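
As a rough check on these figures, the Python sketch below derives the NOPM gain at constant TPM that the two measured ratios imply. The function and variable names are illustrative; the ratios are those quoted above.

```python
def nopm_gain_at_same_tpm(old_ratio: float, new_ratio: float) -> float:
    """Return the NOPM multiplier implied by a change in the TPM:NOPM ratio.

    With TPM held constant, NOPM = TPM / ratio, so the gain is old / new.
    """
    return old_ratio / new_ratio


gain = nopm_gain_at_same_tpm(old_ratio=3.0, new_ratio=2.11)
print(f"Implied NOPM gain: {gain:.2f}X")
```

The result, about 1.42X, falls within the 1.35-1.45X range reported for Oracle.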

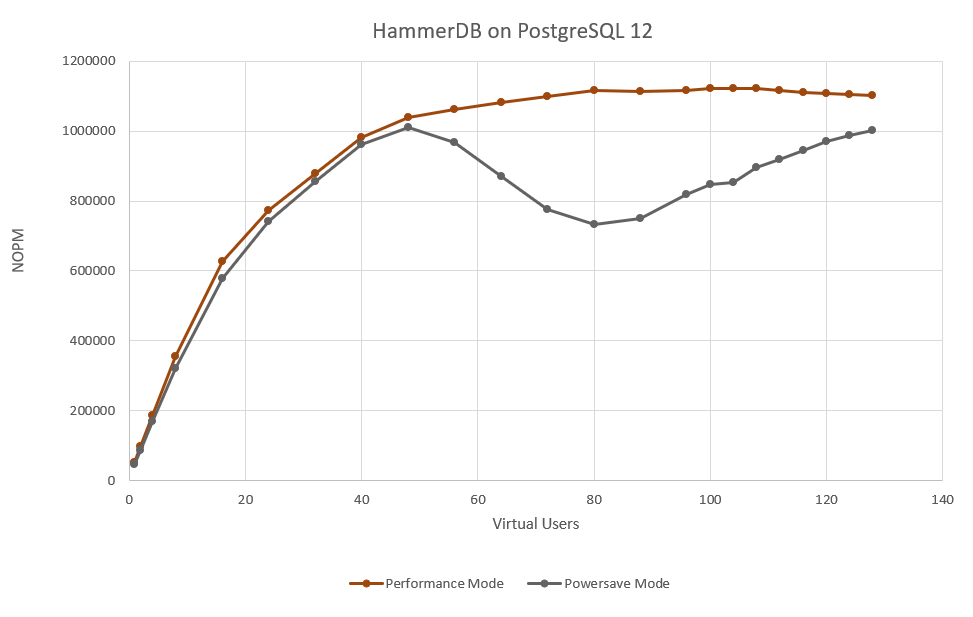

PostgreSQL

The PostgreSQL stored procedures and functions have undergone similar changes to those for Oracle to take advantage of bulk processing features. These changes have improved NOPM and TPM by approximately 1.35X in HammerDB v4.0 compared to previous releases, and the improvement likewise reflects the use of more advanced features.

In conclusion, in v4.0 HammerDB has undergone a number of evolutionary changes for some of the database workloads that affect performance results when compared to earlier versions. These differences should be accounted for when comparing results. The aim is to keep performance as consistent as possible between releases. However, because some databases had introduced new features, or newer and more advanced interfaces had become available, it was considered reasonable to modify the stored procedures or adopt the new interfaces, even though the reported results differ in the newer version.

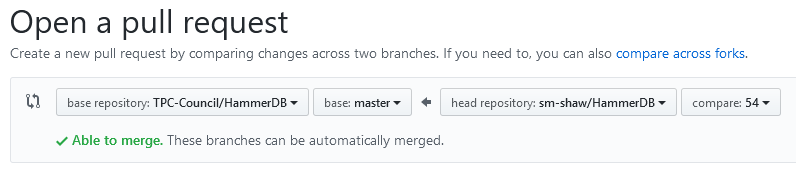

It is also important to note that HammerDB is open source. If you consider that there are underused features in any of the supported databases, a pull request can be made to ensure that those features are fairly represented in the results reported by HammerDB. The overall goal is to remain as consistent as possible whilst providing an accurate representation of the capabilities of each supported database as its features are updated and improved.